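Requirements

Implement cross-region disaster recovery for object storage so that applications stay highly available.

Overview

Before the Jewel release, cross-region disaster recovery for object storage required a separate radosgw-agent process. For the configuration steps, see "Research on cross-datacenter disaster recovery for object storage" (对象存储跨机房容灾调研).

In Jewel, RGW implements cross-region disaster recovery directly in the radosgw process, so no extra radosgw-agent process is needed. Some concepts also changed: region was replaced by zonegroup, and the new realm and period concepts were introduced.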

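Configuration references

http://docs.ceph.com/docs/jewel/radosgw/multisite/

The multisite configuration documentation on the official Ceph site is incomplete and contains some errors; the Red Hat documentation is a better reference.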

Environment

Ceph version: Jewel 10.2.3

Ceph clusters: two Ceph clusters (built on virtual machines)

Configuration

Prerequisites

Two Ceph clusters, with one machine in each chosen to provide the radosgw service:

| Cluster | Nodes | radosgw node |
|---|---|---|
| Ceph cluster 1 | ceph21, ceph22, ceph23 | ceph21 |
| Ceph cluster 2 | ceph31, ceph32, ceph33 | ceph31 |

Planning

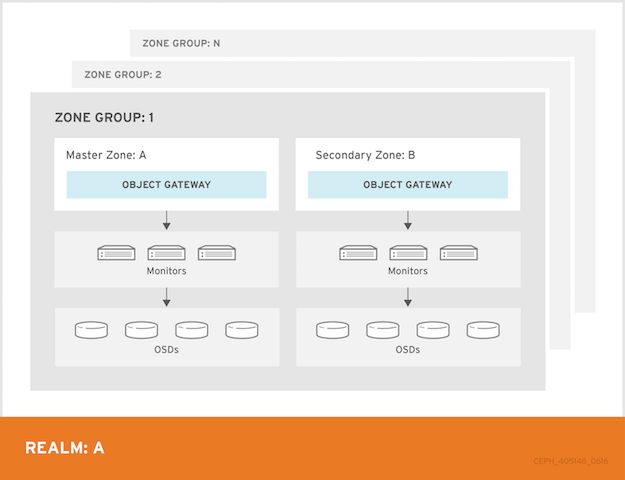

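In RGW multisite, a realm has one master zonegroup and may have additional secondary zonegroups (it also seems possible to configure several independent zonegroups). Each zonegroup has one master zone and may have one or more secondary zones.

In our case, we only need the two sites in Beijing to back each other up, so our configuration is as follows:

Configure one realm containing one master zonegroup, with Ceph cluster 1 as the master zone and Ceph cluster 2 as the secondary zone.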

Steps

Configure the master zone on ceph21

Create the required pools

    root@ceph21:~/mikeyang/rgw# cat rgwPoolCreate.sh
    ceph osd pool create .rgw.root 32
    ceph osd pool create bj-zone02.rgw.control 32 32
    ceph osd pool create bj-zone02.rgw.data.root 32 32
    ceph osd pool create bj-zone02.rgw.gc 32 32
    ceph osd pool create bj-zone02.rgw.log 32 32
    ceph osd pool create bj-zone02.rgw.intent-log 32 32
    ceph osd pool create bj-zone02.rgw.usage 32 32
    ceph osd pool create bj-zone02.rgw.users.keys 32 32
    ceph osd pool create bj-zone02.rgw.users.email 32 32
    ceph osd pool create bj-zone02.rgw.users.swift 32 32
    ceph osd pool create bj-zone02.rgw.users.uid 32 32
    ceph osd pool create bj-zone02.rgw.buckets.index 32 32
    ceph osd pool create bj-zone02.rgw.buckets.data 32 32
    ceph osd pool create bj-zone02.rgw.meta 32 32

Create the realm, zonegroup, and master zone

    # create realm, zonegroup and zone
    radosgw-admin realm create --rgw-realm=cloudin --default
    radosgw-admin zonegroup create --rgw-zonegroup=bj --endpoints=http://<self-ip>:80 --rgw-realm=cloudin --master --default
    radosgw-admin zone create --rgw-zonegroup=bj --rgw-zone=bj-zone02 --endpoints=http://<self-ip>:80 --default --master

Delete the default zonegroup and zone, then update the period

    # remove the default zonegroup and zone, which may not be needed
    radosgw-admin zonegroup remove --rgw-zonegroup=default --rgw-zone=default
    radosgw-admin period update --commit
    radosgw-admin zone delete --rgw-zone=default
    radosgw-admin period update --commit
    radosgw-admin zonegroup delete --rgw-zonegroup=default
    radosgw-admin period update --commit

Create the user required for synchronization

    radosgw-admin user create --uid=zone.user --display-name="Zone User" --system
    radosgw-admin zone modify --rgw-zone=bj-zone02 --access-key={system-key} --secret={secret}
    radosgw-admin period update --commit

Note: --access-key={system-key} and --secret={secret} take the access key and secret key returned by the user create command.
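Copying the two keys by hand is error-prone, so here is a minimal sketch of pulling them out of the JSON that radosgw-admin prints. The helper name extract_key is hypothetical, and the sketch assumes the default pretty-printed output with one key/value pair per line. It also unescapes `\/`, on the assumption that slashes in the secret may appear escaped in the JSON.

```shell
#!/bin/sh
# Hypothetical helper: print the first value of the given JSON field
# ("access_key" or "secret_key") found in text read from stdin.
# Assumes one "key": "value" pair per line; a JSON-escaped slash (\/)
# in the value is turned back into a plain slash.
extract_key() {
    sed -n 's/.*"'"$1"'": *"\([^"]*\)".*/\1/p' | head -n 1 | sed 's|\\/|/|g'
}

# Possible usage on the master zone node (left commented out so that
# sourcing this file never touches the cluster):
# info=$(radosgw-admin user info --uid=zone.user)
# access_key=$(printf '%s\n' "$info" | extract_key access_key)
# secret_key=$(printf '%s\n' "$info" | extract_key secret_key)
# radosgw-admin zone modify --rgw-zone=bj-zone02 \
#     --access-key="$access_key" --secret="$secret_key"
# radosgw-admin period update --commit
```

If jq is available, a proper JSON parser is a more robust choice than sed.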

Edit ceph.conf and start radosgw

    vim /etc/ceph/ceph.conf

    [client.rgw.ceph21]
    host = ceph21
    rgw_frontends = "civetweb port=80"
    rgw_zone=bj-zone02

    service radosgw start id=rgw.ceph21

Configure the secondary zone on ceph31

Create the required pools

    root@ceph31:~/mikeyang/rgw# cat rgwPoolCreate.sh
    ceph osd pool create .rgw.root 32
    ceph osd pool create bj-zone03.rgw.control 32 32
    ceph osd pool create bj-zone03.rgw.data.root 32 32
    ceph osd pool create bj-zone03.rgw.gc 32 32
    ceph osd pool create bj-zone03.rgw.log 32 32
    ceph osd pool create bj-zone03.rgw.intent-log 32 32
    ceph osd pool create bj-zone03.rgw.usage 32 32
    ceph osd pool create bj-zone03.rgw.users.keys 32 32
    ceph osd pool create bj-zone03.rgw.users.email 32 32
    ceph osd pool create bj-zone03.rgw.users.swift 32 32
    ceph osd pool create bj-zone03.rgw.users.uid 32 32
    ceph osd pool create bj-zone03.rgw.buckets.index 32 32
    ceph osd pool create bj-zone03.rgw.buckets.data 32 32
    ceph osd pool create bj-zone03.rgw.meta 32 32

Pull the realm, zonegroup, and period information from the master zone

    radosgw-admin realm pull --url=http://<master-zone-ip>:80 --access-key={system-key} --secret={secret}
    radosgw-admin realm default --rgw-realm=cloudin
    radosgw-admin period pull --url=http://<master-zone-ip>:80 --access-key={system-key} --secret={secret}

Note: --access-key={system-key} and --secret={secret} are the access key and secret key of the synchronization user created on the master zone.

Create the secondary zone

    radosgw-admin zone create --rgw-zonegroup=bj --rgw-zone=bj-zone03 --endpoints=http://<self-ip>:80 --access-key={system-key} --secret={secret}
    radosgw-admin zone delete --rgw-zone=default
    radosgw-admin period update --commit

Note: --access-key={system-key} and --secret={secret} are the access key and secret key of the synchronization user created on the master zone.

Edit ceph.conf and start radosgw

    vim /etc/ceph/ceph.conf

    [client.rgw.ceph31]
    host = ceph31
    rgw_frontends = "civetweb port=80"
    rgw_zone=bj-zone03

    service radosgw start id=rgw.ceph31
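The two rgwPoolCreate.sh scripts differ only in the zone prefix (bj-zone02 versus bj-zone03), so a single parameterized script could serve both clusters. The following is a sketch, not the script used here: the function name rgw_pool_commands is made up, and it only prints the commands so they can be reviewed before anything runs.

```shell
#!/bin/sh
# Hypothetical helper: print the pool-creation commands for one zone.
# $1 = zone name (for example bj-zone03)
# $2 = PG count (optional; defaults to 32, the value used in this setup)
rgw_pool_commands() (
    zone="$1"
    pgs="${2:-32}"
    echo "ceph osd pool create .rgw.root $pgs"
    for suffix in control data.root gc log intent-log usage \
                  users.keys users.email users.swift users.uid \
                  buckets.index buckets.data meta; do
        echo "ceph osd pool create $zone.rgw.$suffix $pgs $pgs"
    done
)

# Review the generated commands first, then pipe them to a shell:
# rgw_pool_commands bj-zone03
# rgw_pool_commands bj-zone03 | sh
```

Printing the commands instead of executing them keeps the helper safe to test on a machine without a Ceph cluster.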

Check the cluster status

Check on the master zone node

    root@ceph21:~/mikeyang/rgw# radosgw-admin sync status
    2016-10-26 11:18:45.124701 7fd18c502900 0 error in read_id for id : (2) No such file or directory
    2016-10-26 11:18:45.125156 7fd18c502900 0 error in read_id for id : (2) No such file or directory
    realm 0b64b20e-2a90-4fc4-a1d6-57fc67457564 (cloudin)
    zonegroup 1bfc8ccd-01ae-477e-a332-af4cb00d3f20 (bj)
    zone 9f621425-cd68-4d2f-b3e7-e85814caef2c (bj-zone02)
    metadata sync no sync (zone is master)
    data sync source: 249b96bd-8f86-4326-80e0-7fceca78dec1 (bj-zone03)
    syncing
    full sync: 0/128 shards
    incremental sync: 128/128 shards
    data is caught up with source
    root@ceph21:~/mikeyang/rgw#

Check on the secondary zone node

    root@ceph31:~/mikeyang/rgw# radosgw-admin sync status
    2016-10-26 11:19:41.715683 7fa016bef900 0 error in read_id for id : (2) No such file or directory
    2016-10-26 11:19:41.716289 7fa016bef900 0 error in read_id for id : (2) No such file or directory
    realm 0b64b20e-2a90-4fc4-a1d6-57fc67457564 (cloudin)
    zonegroup 1bfc8ccd-01ae-477e-a332-af4cb00d3f20 (bj)
    zone 249b96bd-8f86-4326-80e0-7fceca78dec1 (bj-zone03)
    metadata sync syncing
    full sync: 0/64 shards
    metadata is caught up with master
    incremental sync: 64/64 shards
    data sync source: 9f621425-cd68-4d2f-b3e7-e85814caef2c (bj-zone02)
    syncing
    full sync: 0/128 shards
    incremental sync: 128/128 shards
    data is caught up with source

Note: the two error log lines in the output above come from a radosgw bug that had not been fixed at the time of writing; they do not affect correctness.
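For monitoring, the sync status text can be checked by a script instead of by eye. This is a rough sketch with a made-up function name, sync_caught_up; it matches only the status phrases that appear in the output above and assumes a single sync source per zone, as in this two-zone setup.

```shell
#!/bin/sh
# Hypothetical helper: read `radosgw-admin sync status` output on stdin
# and succeed only when data sync is caught up and metadata sync is
# either caught up (secondary zone) or not applicable (master zone).
sync_caught_up() (
    status=$(cat)
    printf '%s\n' "$status" | grep -qF 'data is caught up with source' || exit 1
    printf '%s\n' "$status" | grep -qF \
        -e 'metadata is caught up with master' \
        -e 'no sync (zone is master)'
)

# Possible usage on either radosgw node:
# radosgw-admin sync status | sync_caught_up && echo "zone is in sync"
```

Extra lines, such as the two error log lines mentioned in the note, are ignored because the helper only searches for the status phrases.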

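Data migration

As mentioned earlier, the pools that radosgw uses in Jewel, and the way it uses them, differ considerably from Hammer, so after upgrading from Hammer to Jewel the existing object storage data has to be migrated.

Since only RDS currently uses our object storage and the data volume is small, the migration is fairly easy as long as the object storage service can be interrupted. The steps are as follows:

Export the existing user information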

    openstack@BJBGP02-02-01:~$ radosgw-admin metadata list user
    [
        "cloudInS3User"
    ]
    openstack@BJBGP02-02-01:~$ radosgw-admin user info --uid=cloudInS3User
    {
        "user_id": "cloudInS3User",
        "display_name": "user for s3",
        "email": "",
        "suspended": 0,
        "max_buckets": 1000,
        "auid": 0,
        "subusers": [],
        "keys": [
            {
                "user": "cloudInS3User",
                "access_key": "N8JYDX1HCAM55XDWGL10",
                "secret_key": "uRpR6EbR9YkyjfvdQmuJq0VgEx1a0KONl0NCKlJ9"
            }
        ],
        "swift_keys": [],
        "caps": [],
        "op_mask": "read, write, delete",
        "default_placement": "",
        "placement_tags": [],
        "bucket_quota": {
            "enabled": false,
            "max_size_kb": -1,
            "max_objects": -1
        },
        "user_quota": {
            "enabled": false,
            "max_size_kb": -1,
            "max_objects": -1
        },
        "temp_url_keys": []
    }

The system currently has only one user, cloudInS3User, which is used for RDS backups.

Export the bucket information for the existing user

    openstack@BJBGP02-02-01:~$ radosgw-admin metadata list bucket
    [
        "database_backups"
    ]

Export the objects in the existing bucket

    ictfox@YangGuanjun-MacBook-Pro:~$ s3cmd ls s3://database_backups
    2016-09-01 08:22 276864 s3://database_backups/03f55add-fda5-404e-99b2-04b34b32ec56.xbstream.gz.enc
    2016-09-05 03:14 279232 s3://database_backups/147a2c80-0f7d-4ecd-9c4a-e9a48b7cd703.xbstream.gz.enc
    ...
    2016-09-01 09:38 278320 s3://database_backups/dbfee332-5f4e-4596-bef6-63797a24d9e0.xbstream.gz.enc
    2016-08-31 09:54 276144 s3://database_backups/f703131f-7dd7-43a6-9b20-7fe33a9fbe59.xbstream.gz.enc

Then save each object in the bucket to a local file with:

    s3cmd get s3://database_backups/OBJECT LOCAL_FILE

Restore the data after the radosgw upgrade

    # radosgw-admin user create --uid="cloudInS3User" --display-name="user for s3" --access-key=N8JYDX1HCAM55XDWGL10 --secret=uRpR6EbR9YkyjfvdQmuJq0VgEx1a0KONl0NCKlJ9

Create a backup from a test RDS instance; this automatically creates the bucket database_backups.

Then restore the object files that were saved in the export step above:

    # s3cmd put FILE [FILE...] s3://database_backups/
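With more than a handful of objects, the export step is easier to script than to run one object at a time. The sketch below is only an illustration: list_object_urls and backup_bucket are hypothetical names, and the parsing assumes that `s3cmd ls` prints date, time, size, and URL as in the listing shown earlier and that object keys contain no whitespace.

```shell
#!/bin/sh
# Hypothetical helper: print the s3:// URL from each object line of
# `s3cmd ls` output read from stdin. Object lines are assumed to look
# like "DATE TIME SIZE s3://bucket/key"; anything else is skipped.
list_object_urls() {
    awk '$4 ~ /^s3:\/\// { print $4 }'
}

# Hypothetical helper: download every object of a bucket.
# $1 = bucket URL (for example s3://database_backups)
# $2 = local directory that receives the files
backup_bucket() (
    bucket="$1"
    dest="$2"
    mkdir -p "$dest" || exit 1
    s3cmd ls "$bucket" | list_object_urls | while read -r url; do
        # Name the local file after the last path component of the key.
        s3cmd get "$url" "$dest/${url##*/}" || exit 1
    done
)

# Possible usage before the upgrade, then after it:
# backup_bucket s3://database_backups ./database_backups
# s3cmd put ./database_backups/* s3://database_backups/
```

s3cmd also offers a `--recursive` option for `get`, which may be simpler when the whole bucket is needed.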