Current Situation

Today we limit the IO of each kind of cloud disk in only one way: we create Cinder volume types and attach IO limits to each type.

For the detailed approach and background research, see the document "Cloud Disk Dynamic IO Throttling Research".

Goals

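- Make full use of system resources. When both host load and Ceph load are low, dynamically raise cloud disk IO limits to give users a better experience.

- Design a framework that meets the requirements of dynamic cloud disk throttling. The same framework should make it easy to add dynamic network throttling later.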

Framework Design

Requirements

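- Unified configuration options

- Modular design, so that other dynamic throttling requirements can be added easily

- Unified scheduling, with each physical node running independently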

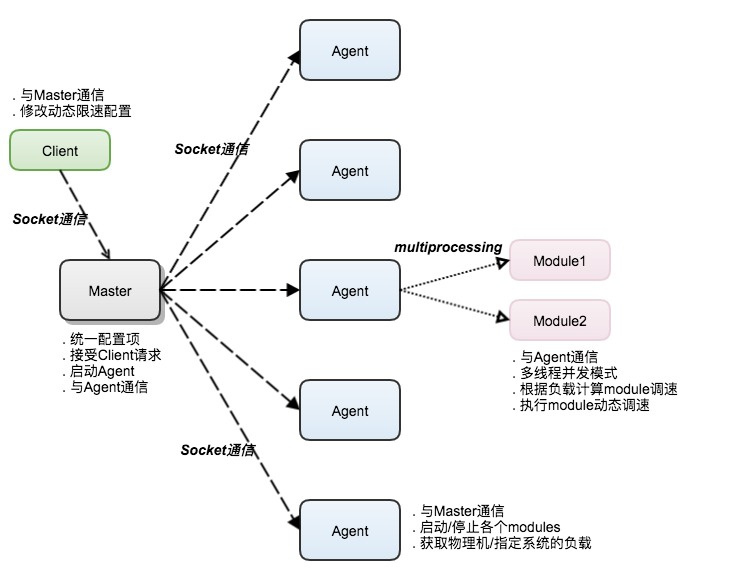

Option 1

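A decentralized, self-contained architecture. Each physical node is responsible for monitoring its own load and applying dynamic throttling.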

Pros:

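- Standalone implementation, relatively simple

- Each physical node computes its own load, which reduces network communication

- The Master only needs to maintain the configuration items, which can be kept in memory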

Cons:

- Cannot be integrated with the existing monitoring system

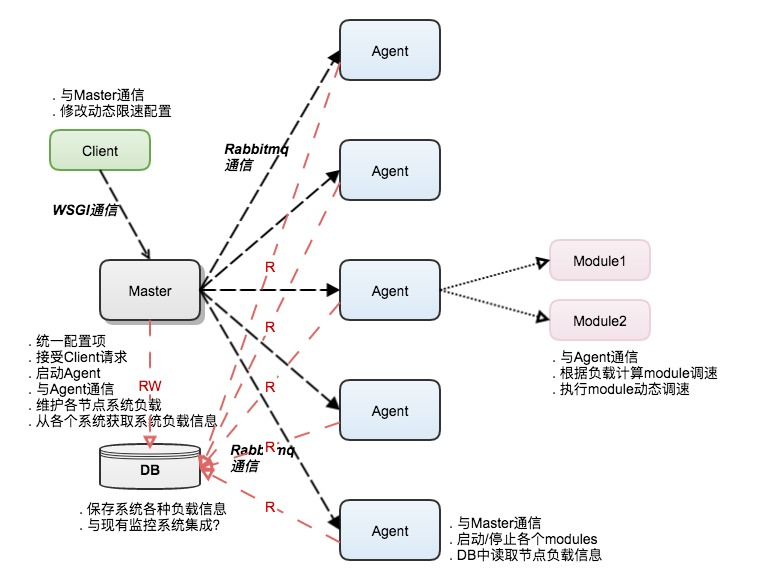

Option 2

Follow the OpenStack design. The Master centrally maintains system load and stores it in a DB, and the Master and Agents communicate through RabbitMQ.

Pros:

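- Reuses OpenStack's DB, RabbitMQ, RPC, WSGI and other modules

- System load information is maintained centrally in the DB

- Easy to integrate with the existing monitoring system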

Cons:

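- Integrating several of OpenStack's common modules means considerably more code and work

- Storing system load centrally in the DB does not appear necessary at present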

Choosing an Option

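I personally lean toward Option 1. It does not require pulling in OpenStack's modules, it is comparatively simple to implement, and it still meets our project's needs.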

Cloud Disk Throttling Design

Overview

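As the research showed, virsh commands can adjust the IO limits of a given cloud disk of a virtual machine. These commands run on the physical host where the nova instance lives.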

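The throttling commands must therefore run on the host of each nova instance. In other words, every physical host that runs nova instances needs its own throttling agent process.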

We want dynamic cloud disk throttling to depend on two factors:

- The load of the physical host

- The IO load of the Ceph pool backing the cloud disk

This leads to a simple design:

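Each Agent runs independently and dynamically adjusts the cloud disk IO of the nova instances on its own host. The Agent can read the host's real-time load and the IO load of the corresponding Ceph pool.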

Considerations

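- Dynamic throttling can be turned on or off via configuration

- Whitelist support

- Blacklist support

- The supported cloud disk types are specified via configuration

- The dynamic throttling mode is specified via configuration:

  - Physical host load + Ceph pool load

  - Physical host load only

  - Ceph pool load only

- The interval between dynamic throttling adjustments is configurable

- The dynamic throttling range of each cloud disk type is configurable: [begin, end]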
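Below is a minimal sketch of how the considerations above might combine into one per-volume eligibility check. All field names are hypothetical. The source also does not say what the whitelist and blacklist contain, so this sketch assumes they hold tenant IDs. The blacklist takes precedence over the whitelist, and an empty whitelist means "allow all"; both are design assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    # The three throttling modes listed above
    HOST_AND_CEPH = "host+ceph"
    HOST_ONLY = "host"
    CEPH_ONLY = "ceph"


@dataclass
class ThrottleConf:
    # Hypothetical field names; the real option names are not given in the source
    enabled: bool = True
    whitelist: set = field(default_factory=set)    # assumed: tenant IDs
    blacklist: set = field(default_factory=set)    # assumed: tenant IDs
    volume_types: set = field(default_factory=set)
    mode: Mode = Mode.HOST_AND_CEPH
    interval: int = 60                             # seconds between adjustments
    ranges: dict = field(default_factory=dict)     # volume type -> (begin, end)


def should_throttle(tenant_id: str, volume_type: str, conf: ThrottleConf) -> bool:
    """Decide whether one volume is eligible for dynamic throttling."""
    if not conf.enabled:
        return False
    if volume_type not in conf.volume_types:
        return False
    if tenant_id in conf.blacklist:
        return False
    # An empty whitelist is treated as "allow everyone" (an assumption)
    if conf.whitelist and tenant_id not in conf.whitelist:
        return False
    return True
```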

Example Configuration

```
[DEFAULT]
```

The code reads the configuration above and builds a conf dictionary from it in the script.
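Here is a minimal sketch of building that dictionary with Python's standard `configparser`. Only the `[DEFAULT]` section name comes from the original example, and `bw_range`/`iops_range` are the names used later in this document. The other options (`enabled`, `mode`, `interval`, `volume_types`) and the per-type `[sata]` section layout are hypothetical, and so are the units of the range values.

```python
import configparser

# Hypothetical file contents; only [DEFAULT], bw_range and iops_range come from the document
SAMPLE = """
[DEFAULT]
enabled = true
mode = host+ceph
interval = 60
volume_types = sata, ssd

[sata]
bw_range = 50, 100
iops_range = 300, 600
"""


def parse_range(text):
    """Turn 'begin, end' into a (begin, end) tuple of ints."""
    begin, end = (int(x) for x in text.split(","))
    return begin, end


def load_conf(text):
    parser = configparser.ConfigParser()
    parser.read_string(text)
    defaults = parser["DEFAULT"]
    conf = {
        "enabled": defaults.getboolean("enabled"),
        "mode": defaults.get("mode"),
        "interval": defaults.getint("interval"),
        "types": {},
    }
    for vtype in (t.strip() for t in defaults.get("volume_types").split(",")):
        # Types listed without their own section are skipped
        if parser.has_section(vtype):
            section = parser[vtype]
            conf["types"][vtype] = {
                "bw_range": parse_range(section["bw_range"]),
                "iops_range": parse_range(section["iops_range"]),
            }
    return conf
```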

Commands Used and What They Do

1) Get information about the Cinder volumes in the system

cinder list --all | grep "in-use" | awk '{print $2,$4,$8,$12}'

```
(.venv)openstack@Server-01-01:~$ cinder list --all | grep "in-use" | awk '{print $2,$4,$8,$12}'
```
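The awk field numbers depend on the exact column layout of `cinder list`. The sketch below instead picks columns by header name from the pipe-delimited table. That approach tolerates column reordering and names that contain spaces. The column names used here (`ID`, `Tenant ID`, `Status`, `Name`, `Volume Type`) are assumptions; check them against your client version.

```python
def parse_table(text):
    """Parse an OpenStack CLI pipe-delimited table into a list of dicts."""
    rows = []
    header = None
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and +----+ border lines
        if not line.startswith("|"):
            continue
        cells = [c.strip() for c in line.strip("|").split("|")]
        if header is None:
            header = cells
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def in_use_volumes(text):
    """Keep only attached volumes, as the grep "in-use" step does."""
    return [
        (r["ID"], r["Tenant ID"], r["Name"], r["Volume Type"])
        for r in parse_table(text)
        if r.get("Status") == "in-use"
    ]
```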

2) Find the Tenant ID of each user listed in the configuration file

openstack project list | grep "user-id"

```
openstack@Server-01-01:~$ openstack user list | grep usr-kndz857b
```

3) List the virsh instances (domains) on this physical host

virsh list: list domains

```
openstack@Server-01-01:~$ virsh list
```
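Here is a small sketch that turns `virsh list` output into a name-to-state map. It assumes the usual layout: an `Id Name State` header, a dashed rule, then one row per domain. `virsh list --name` prints names only, which avoids parsing when the state is not needed.

```python
def parse_virsh_list(text):
    """Return {domain_name: state} from `virsh list` output."""
    domains = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows start with a numeric Id; this skips the header and the dashed rule
        if len(parts) >= 3 and parts[0].isdigit():
            domains[parts[1]] = " ".join(parts[2:])
    return domains
```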

4) List all block devices of a given virsh instance

virsh domblklist <domain>: list all domain blocks

```
openstack@Server-01-01:~$ virsh domblklist instance-00000921
```
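To match the volumes found in step 1 to guest devices, the agent can map each RBD source back to a Cinder volume ID. This sketch assumes RBD sources named `<pool>/volume-<uuid>`, which is Cinder's default `volume_name_template`; adjust it if your deployment names images differently. The pool is kept as well because it identifies which Ceph pool's load applies.

```python
def volume_devices(text):
    """Map Cinder volume ID -> (target device, Ceph pool) from `virsh domblklist` output.

    Assumes sources look like '<pool>/volume-<uuid>' (Cinder's default naming).
    """
    mapping = {}
    for line in text.splitlines():
        parts = line.split()
        # Skip the header, the dashed rule and anything that is not "target source"
        if len(parts) != 2 or parts[0] == "Target" or parts[0].startswith("-"):
            continue
        target, source = parts
        pool, _, image = source.rpartition("/")
        if image.startswith("volume-"):
            mapping[image[len("volume-"):]] = (target, pool)
    return mapping
```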

5) Query or modify the iotune settings of a block device

virsh blkdeviotune <domain> <device>: Set or query a block device I/O tuning parameters

```
openstack@Server-01-01:~$ virsh blkdeviotune instance-00000921 vdb
```
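When called without setting options, `virsh blkdeviotune` prints one `name: value` pair per line. This sketch reads the numeric ones into a dict so the agent can compare current limits with the target. Non-numeric fields, such as an empty `group_name` on newer libvirt, are skipped.

```python
def parse_iotune(text):
    """Parse `virsh blkdeviotune <domain> <device>` query output into a dict of ints."""
    values = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            values[key.strip()] = int(value.strip())
    return values
```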

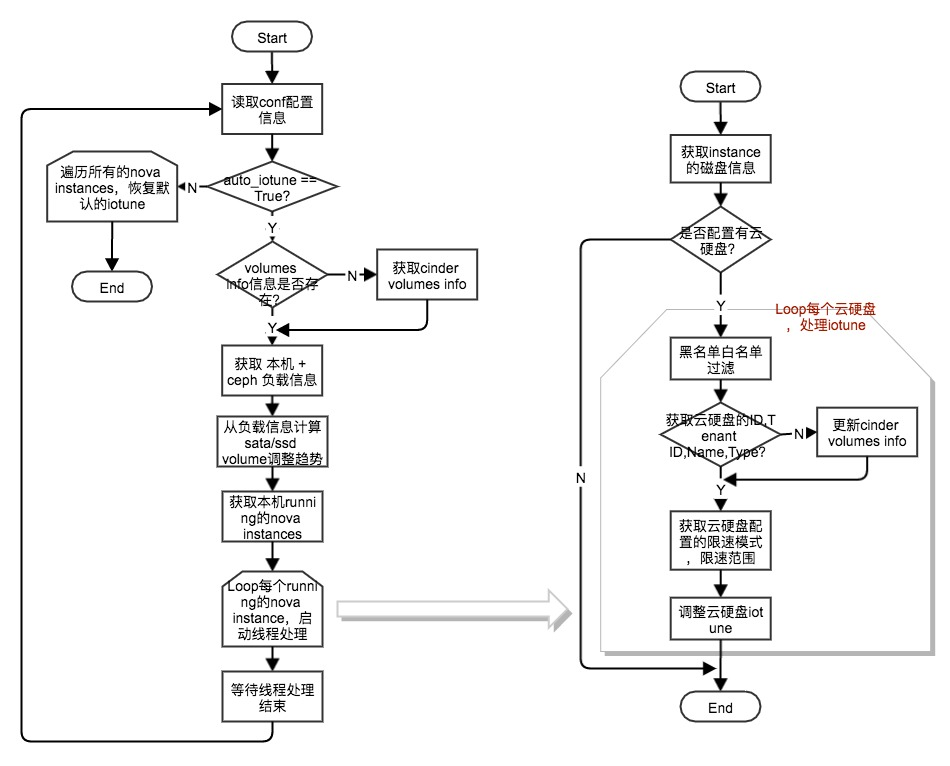

Agent Workflow

Collecting Load Information

1) Load of the local host

```
$ uptime
```

This gives the load averages over the last 1, 5 and 15 minutes, which in this example are 2.53, 2.82 and 2.80.
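Here is a short sketch of extracting the three load averages from `uptime` output. On Linux and other Unix systems, Python's `os.getloadavg()` returns the same three numbers without starting a subprocess.

```python
import os
import re


def parse_uptime(text):
    """Extract the 1, 5 and 15 minute load averages from `uptime` output."""
    # Linux prints "load average: a, b, c"; some BSD-style systems print "load averages: a b c"
    match = re.search(r"load averages?:\s*([\d.]+),?\s+([\d.]+),?\s+([\d.]+)", text)
    if match is None:
        raise ValueError("no load average found in: %r" % text)
    return tuple(float(x) for x in match.groups())


def current_load():
    # Same three numbers, read directly from the OS (Unix only)
    return os.getloadavg()
```

Load averages scale with the number of cores, so comparing `load / os.cpu_count()` against a threshold may transfer better across differently sized hosts. This is a design suggestion, not part of the original plan.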

2) Ceph load

```
$ ceph osd tree
```

From this output, derive the OSDs that belong to each type of disk:

```
{"sata" : (0,1,2,3,4,5,6,7,...,26,27)}
```

[Note: this mapping does not change, so it only needs to be fetched once.]
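Here is a sketch of deriving that mapping from the JSON form of the tree (`ceph osd tree -f json`) rather than the text form. It assumes SATA and SSD disks sit under separate CRUSH roots named `sata` and `ssd`; that is an assumption, and on Luminous and later, device classes are an alternative. The code walks each root's `children` down to nodes of type `osd`.

```python
import json


def osds_by_root(tree_json):
    """Return {root_name: sorted tuple of OSD ids} from `ceph osd tree -f json` output."""
    nodes = {n["id"]: n for n in json.loads(tree_json)["nodes"]}
    groups = {}
    for node in nodes.values():
        if node["type"] != "root":
            continue
        osds, stack = [], list(node.get("children", []))
        # Depth-first walk from the root down to the OSD leaves
        while stack:
            child = nodes.get(stack.pop())
            if child is None:
                continue
            if child["type"] == "osd":
                osds.append(child["id"])
            else:
                stack.extend(child.get("children", []))
        groups[node["name"]] = tuple(sorted(osds))
    return groups
```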

```
$ ceph osd perf
```

Compute the average load of the SATA disk group and of the SSD disk group:

```
{"sata" : [1, 2], "ssd" : [1, 6]}
```

[Note: this information must be fetched periodically.]
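`ceph osd perf` prints one row per OSD: its id, then commit and apply latency in milliseconds. The header wording may differ between Ceph releases, so this sketch only relies on the numeric rows. It reads the pair in the example above as [average commit latency, average apply latency], which is an interpretation, and it takes the OSD groups from the previous step.

```python
def average_perf(perf_text, groups):
    """Average commit/apply latency (ms) per OSD group from `ceph osd perf` text output.

    groups: {group_name: iterable of OSD ids}
    Returns {group_name: [avg_commit_ms, avg_apply_ms]}.
    """
    latency = {}
    for line in perf_text.splitlines():
        parts = line.split()
        # Data rows are "<osd id> <commit ms> <apply ms>"; the header row is skipped
        if len(parts) == 3 and all(p.isdigit() for p in parts):
            latency[int(parts[0])] = (int(parts[1]), int(parts[2]))
    result = {}
    for name, osds in groups.items():
        samples = [latency[o] for o in osds if o in latency]
        if samples:
            result[name] = [
                sum(s[0] for s in samples) / len(samples),
                sum(s[1] for s in samples) / len(samples),
            ]
    return result
```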

Dynamic IO Limit Adjustment

Formula:

```
sys_load_throttle = 20
```
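Here is a minimal sketch of one possible adjustment rule. It is not the author's formula: only `sys_load_throttle = 20` appears above. Under this rule, if the relevant load is below its threshold, the limit steps up toward `end`; otherwise it steps down toward `begin`. The mode decides which load is checked. `ceph_latency_throttle` and `step_ratio` are hypothetical parameters.

```python
sys_load_throttle = 20       # from the document
ceph_latency_throttle = 10   # hypothetical: average apply latency threshold in ms
step_ratio = 0.1             # hypothetical: move 10% of the range per interval


def next_limit(current, rng, host_load, ceph_latency, mode="host+ceph"):
    """Return the new limit for one volume, clamped to rng = (begin, end)."""
    begin, end = rng
    host_ok = host_load < sys_load_throttle
    ceph_ok = ceph_latency < ceph_latency_throttle
    if mode == "host":
        idle = host_ok
    elif mode == "ceph":
        idle = ceph_ok
    else:
        idle = host_ok and ceph_ok
    step = max(1, int((end - begin) * step_ratio))
    new = current + step if idle else current - step
    return min(end, max(begin, new))
```

Stepping gradually instead of jumping straight to `end` limits oscillation when several agents react to the same Ceph pool load at once.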

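Loop over every instance. For each volume whose type calls for a higher or lower limit, set the volume's new iotune values:

Look up the volume's bw_range and iops_range in the conf, then call the iotune adjustment function to apply the new values.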
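Here is a sketch of applying new limits with `virsh blkdeviotune`, using its `--total-bytes-sec`, `--total-iops-sec` and `--live` options. Setting only the total limits, and only on the live domain, are choices made for this example. With `--live`, the persistent domain definition is left alone, so a restarted guest should fall back to the limits Nova configured; verify this in your environment.

```python
import subprocess


def iotune_args(domain, device, bytes_sec, iops_sec):
    """Build a `virsh blkdeviotune` command that sets total bandwidth and IOPS limits."""
    return [
        "virsh", "blkdeviotune", domain, device,
        "--total-bytes-sec", str(int(bytes_sec)),
        "--total-iops-sec", str(int(iops_sec)),
        "--live",  # change the running domain only, not its persistent config
    ]


def apply_iotune(domain, device, bytes_sec, iops_sec):
    # check=True raises CalledProcessError if virsh exits with an error
    subprocess.run(iotune_args(domain, device, bytes_sec, iops_sec), check=True)
```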

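Default Cloud Disk Limits

Before the dynamic throttling program exits, it must reset the affected cloud disks to their default limits. The defaults are:

```
(.venv)openstack@Server-01-01:~$ cinder qos-list
```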
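Here is a minimal sketch of guaranteeing that reset. The main loop runs inside `try`/`finally`, and a SIGTERM handler turns the signal into `SystemExit` so the `finally` block still executes. `adjust_once` and `restore_defaults` are hypothetical callables; the latter would reapply the values from `cinder qos-list`. SIGKILL cannot be caught, so a hard kill still skips the reset.

```python
import signal
import time


def _raise_exit(signum, frame):
    # Convert SIGTERM into SystemExit so try/finally cleanup runs
    raise SystemExit(0)


def install_sigterm_handler():
    # Must be called from the main thread
    signal.signal(signal.SIGTERM, _raise_exit)


def run_agent(adjust_once, restore_defaults, interval, iterations=None):
    """Call adjust_once() every `interval` seconds; always restore defaults on exit."""
    count = 0
    try:
        while iterations is None or count < iterations:
            adjust_once()
            count += 1
            time.sleep(interval)
    finally:
        restore_defaults()
```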